In a billing application that generates invoices and manages payments, reliability, consistency, and fault tolerance are paramount. The system must ensure that all business-critical actions – such as generating invoices, processing payments, and updating balances – are reliably executed and communicated, even in the face of network failures, system crashes, or temporary service disruptions.

When messages fail, they need to be retried, but depending on the architecture of the system, this can become a manual and error-prone process. In many traditional message systems the burden of retrying or fixing failed messages falls on the developers or system administrators. While this approach does ensure no messages are lost, it requires active management and monitoring.

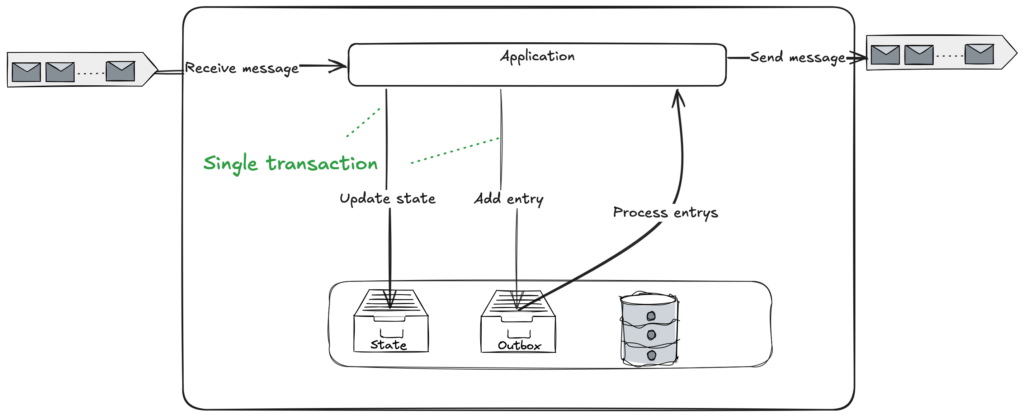

To ensure resilient message processing and to reduce the mentioned manual overhead, at Nitrobox we implement the Outbox Pattern at critical points. It plays a vital role in ensuring that events are safely and consistently delivered to other microservices and external systems, such as notification services, payment providers, and accounting systems, by using a single transaction where all actions are executed together or not at all.


The Challenge of Message Failures in Distributed Systems

Common Causes of Message Failures in Microservices Communication

In our message-based system, messages are sent from one service to another via a message broker such as ActiveMQ or Azure Service Bus. Sometimes these messages fail, for reasons such as:

- Network issues: Temporary connectivity problems between services.
- Service unavailability: The destination service might be down.
- Business logic errors: The receiving service might reject the message due to some validation failure.
- System overload: The message queue or service might be too overwhelmed to process the message at that moment.

Dead Letter Queue (DLQ) – Why It’s Not Always Enough

When a message cannot be delivered or processed, the broker typically moves it to a dead letter queue (DLQ) so that it is not lost. Once a message ends up in the DLQ, someone needs to:

- Inspect the message: Understand why it failed.
- Fix the issue: This could involve fixing system bugs, resolving service unavailability, correcting the message itself or, in the worst case, fixing inconsistencies.
- Retry the message: Once the issue is resolved, the message has to be manually placed back into the main queue or retried.

This process is time-consuming and error-prone, and it can slow down the overall system. Depending on how critical the message is, delays can lead to significant business or technical issues.

Another even more severe problem arises when an event is sent as a result of a state change: the event might be sent before the state change is persisted in the database, or vice versa. If something goes wrong after one part of the operation but before the other, you’ll be left with either:

- An updated state with no corresponding event to notify other parts of the system.
- An event emitted but with no state update, leading to inconsistencies when other services or systems read the state.
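
The first of these two outcomes can be reproduced with a few lines of plain Java. This is only an illustrative sketch: `NaiveInvoiceService`, its two lists, and the `brokerAvailable` flag are hypothetical stand-ins for a database and a message broker, not code from our services.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory stand-ins, used only to illustrate the dual-write problem.
class NaiveInvoiceService {
    final List<String> invoiceTable = new ArrayList<>(); // stands in for the database
    final List<String> broker = new ArrayList<>();       // stands in for the message broker
    boolean brokerAvailable = true;

    // Dual write: two independent systems are updated without a shared transaction.
    void createInvoice(String invoiceId) {
        invoiceTable.add(invoiceId);                // 1. the state change is persisted
        if (!brokerAvailable) {                     // 2. publishing fails afterwards
            throw new IllegalStateException("broker unreachable");
        }
        broker.add("InvoiceCreated:" + invoiceId);  // never reached when the broker is down
    }
}
```

If `brokerAvailable` is set to `false`, `createInvoice("INV-1")` throws, yet `invoiceTable` already contains `INV-1` while `broker` stays empty: the state was updated and no event exists for it.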

What is the Outbox Pattern? A Key Event-Driven Architecture Pattern

The Outbox Pattern is a technique that ensures reliable event delivery between services, particularly in systems where a service needs to publish an event (such as an invoice-created event) after completing a local transaction (like generating an invoice in a billing system).

Here’s how it works:

1. Message Persistence with the Outbox Table

Instead of sending messages directly to the message broker, the service writes the message to a special Outbox Table in its local database. This ensures that the message is durable and won’t be lost if there is a failure during transmission. The Outbox Table serves as a staging area for messages to be sent.

2. Ensuring Transactional Integrity with Atomic Transactions

To ensure that messages are only sent if the business operation succeeds, the process of writing the message to the Outbox Table is part of the same transaction as the business operation. For example, if a service is processing an order and needs to send a message to another service, the order is saved in the main table, and the message is simultaneously written to the Outbox Table within the same transaction.

3. Automated Message Processing and Retries for Reliability

A background worker (or a similar mechanism) periodically scans the Outbox Table for unsent messages. When a message is detected, the worker sends it to the message broker and marks the message as successfully sent in the Outbox Table.

4. Automatic Retries in Case of Failure for Reliability

If the message fails to be sent (due to temporary issues like network failures, service unavailability, etc.), the worker will simply retry sending the message. This retry mechanism can be configured with exponential backoff or other strategies to avoid overwhelming the system. The retries happen automatically, which is the key benefit, as it removes the requirement for human intervention.

5. Guaranteed Delivery

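Because the message is written to the Outbox Table before any attempt to send it, and retries are automatic, the Outbox Pattern ensures that the message is eventually delivered without anyone having to intervene manually via a DLQ. Once the underlying issue is resolved (for example, the service is restored or the network is repaired), the message is delivered without any manual effort. Note that this is at-least-once delivery: if the worker crashes after sending a message but before marking it as sent, the message is sent again, so consumers should be able to handle duplicates.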
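
The five steps above can be sketched in plain Java. This is a minimal in-memory illustration under stated assumptions, not the implementation of our starter: `OutboxSketch`, `Entry`, `createInvoice` and `relayOnce` are hypothetical names, the two lists stand in for tables of one database, and swapping in staged copies stands in for a database commit.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical in-memory sketch of the outbox flow (not the real starter API).
class OutboxSketch {
    static final class Entry {
        final String payload;
        boolean sent = false;
        int attempts = 0;
        Entry(String payload) { this.payload = payload; }
    }

    List<String> invoiceTable = new ArrayList<>();
    List<Entry> outboxTable = new ArrayList<>();

    // Steps 1 and 2: the business row and the outbox row are committed together or not at all.
    void createInvoice(String invoiceId) {
        List<String> stagedInvoices = new ArrayList<>(invoiceTable);
        List<Entry> stagedOutbox = new ArrayList<>(outboxTable);
        if (stagedInvoices.contains(invoiceId)) {
            // Simulated constraint violation: nothing staged becomes visible (rollback).
            throw new IllegalStateException("duplicate invoice " + invoiceId);
        }
        stagedInvoices.add(invoiceId);
        stagedOutbox.add(new Entry("InvoiceCreated:" + invoiceId));
        invoiceTable = stagedInvoices; // "commit": both changes become visible together
        outboxTable = stagedOutbox;
    }

    // Steps 3 and 4: one polling pass of the background worker.
    // Returns the number of entries delivered in this pass.
    int relayOnce(Consumer<String> broker, int maxAttempts) {
        int delivered = 0;
        for (Entry entry : outboxTable) {
            if (entry.sent || entry.attempts >= maxAttempts) {
                continue; // already delivered, or retries exhausted
            }
            entry.attempts++;
            try {
                broker.accept(entry.payload);
                entry.sent = true; // mark as sent only after the broker accepted it
                delivered++;
            } catch (RuntimeException e) {
                // Leave the entry unsent; the next pass retries it automatically.
            }
        }
        return delivered;
    }
}
```

Calling `relayOnce` with a broker that throws leaves the entry unsent, and a later call with a working broker delivers it (step 5). A real worker would additionally wait between attempts, for example with exponential backoff.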

When to Use the Outbox Pattern in Microservices Messaging

Outbox Pattern vs. Event Sourcing – Which One to Use?

While the Outbox Pattern offers many advantages, it is not the best fit for every scenario. It is especially useful when you need:

- Atomic operations across services: You need to ensure that actions (like sending a message) happen only if the underlying business transaction is successful.
- Automatic retries: You want to avoid manual intervention in retrying failed messages, particularly in high-throughput systems.
- Eventual consistency: You can tolerate eventual consistency between services, where messages may be delayed but will eventually be processed.

On the other hand, for systems with extremely high message volume or where messages must be delivered with strict immediacy, other patterns like event sourcing might be more appropriate.

Implementing the Outbox Pattern in Spring Boot

How Nitrobox Uses the Outbox Pattern for Reliable Messaging

At Nitrobox we implemented Spring Boot starter libraries that provide the outbox functionality for services using MySQL or MongoDB. The starter supports multiple outboxes and is highly configurable.

In addition, there is a library that provides API endpoints to maintain outbox entries and a library intended to be used when sending AMQP messages.

| Module | Description |
|---|---|
| core | The core functionality of the outbox |
| mysql | MySQL implementation |
| mongodb | MongoDB implementation |
| mvc | Provides a controller with endpoints to maintain outbox entries |
| amqp | Provides auto-configured Spring beans to make it easier to use the outbox starter when sending AMQP messages |

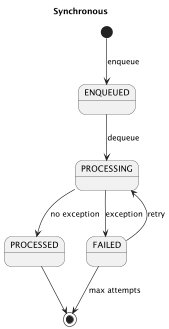

Status Model

For the outbox entries we use the following status model:

- ENQUEUED – the outbox entry has been stored in the outbox and will be processed
- PROCESSING – the outbox entry is currently being processed
- PROCESSED – the outbox entry has been successfully processed
- FAILED – processing of the outbox entry failed (e.g. an exception has been thrown)

The status model is crucial for ensuring the correct processing of events. By incorporating this model, each entry in the outbox can be tracked through its lifecycle, allowing for the accurate recording of timestamps whenever there are status changes. This not only guarantees that the events are processed correctly but also provides an audit trail that can be useful for troubleshooting and monitoring. By tracking the status and timestamps, developers can easily pinpoint where a failure may have occurred and maintain better control over the event flow.
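
As an illustration, the status model and its timestamps could be represented as follows. The four status names come from the list above; everything else is an assumption made for this sketch: the names `OutboxStatus`, `TrackedEntry`, `allowedNext` and `moveTo` are hypothetical, and the set of allowed transitions is inferred from the descriptions rather than taken from the starter. Handling of stale PROCESSING entries is left out.

```java
import java.time.Instant;
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.Map;
import java.util.Set;

enum OutboxStatus {
    ENQUEUED, PROCESSING, PROCESSED, FAILED;

    // Assumed transitions; the real starter may allow others.
    Set<OutboxStatus> allowedNext() {
        switch (this) {
            case ENQUEUED:   return EnumSet.of(PROCESSING);
            case PROCESSING: return EnumSet.of(PROCESSED, FAILED);
            case FAILED:     return EnumSet.of(PROCESSING); // a retry picks the entry up again
            default:         return EnumSet.noneOf(OutboxStatus.class); // PROCESSED is final
        }
    }
}

// Hypothetical outbox entry that records when each status was last entered.
final class TrackedEntry {
    private OutboxStatus status = OutboxStatus.ENQUEUED;
    private final Map<OutboxStatus, Instant> changedAt = new EnumMap<>(OutboxStatus.class);

    TrackedEntry(Instant createdAt) {
        changedAt.put(status, createdAt);
    }

    void moveTo(OutboxStatus target, Instant now) {
        if (!status.allowedNext().contains(target)) {
            throw new IllegalStateException("illegal transition " + status + " -> " + target);
        }
        status = target;
        changedAt.put(target, now); // audit trail: timestamp of the status change
    }

    OutboxStatus status() { return status; }

    Instant lastChangedTo(OutboxStatus s) { return changedAt.get(s); }
}
```

Rejecting illegal transitions in one place makes it harder for an entry to end up in a state that the timestamps cannot explain, which is what makes the audit trail usable for troubleshooting.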

Our Configuration for Outbox Processing

The outbox needs to provide the flexibility to configure the retry of failed entries, prevent the overloading of receiving systems and offer the possibility to delete entries that have been successfully processed after a certain period of time.

Therefore the following configuration properties are available:

| Property | Description |
|---|---|
| expireAfter | Time after which PROCESSED entries are deleted |
| circuit-breaker | Optional circuit breaker config which is used when ENQUEUED entries are automatically dequeued and when FAILED entries are retried. See CircuitBreaker |
| ignoreExceptions | List of classes of exceptions which are ignored. If a configured exception is thrown when processing an entry, the entry will not be retried and deleted after expireAfter. Ignored exceptions are also not registered as failure by the circuit breaker. Note: The entry will still be marked as FAILED. |
| retry.maxAttempts | Max attempts. Including the initial call as the first attempt. |
| retry.processingStaleAfter | Time after which PROCESSING entries are retried. Assuming the processing of the entry was aborted (e.g. due to application shutdown). If set to 0, entries in state PROCESSING are not retried. |
| retry.backoff.initialInterval | Initial interval of the retry backoff strategy. |
| retry.backoff.multiplier | Multiplier of the retry backoff strategy. |
| retry.backoff.maxInterval | Max interval of the retry backoff strategy. |
| retry.schedule.trigger.interval | Periodic trigger interval of the retry job. The periodic interval is measured between actual completion times (fixed-delay). |
| retry.schedule.trigger.initialDelay | Initial delay after application start of the retry job. |
| retry.schedule.lock.atMostFor | ShedLock configuration of the retry job. The lock is held until this duration passes, after that it's automatically released (the process holding it has most likely died without releasing the lock). |
| retry.schedule.lock.atLeastFor | ShedLock configuration of the retry job. The lock will be held at least this duration even if the task holding the lock finishes earlier. |

An example configuration in application.yaml might look like:

    outbox:
      outboxes:
        InboxDocumentProvisioned:
          expireAfter: 30d
          circuit-breaker:
            slidingWindowSize: 100
            minimumNumberOfCalls: 10
            permittedNumberOfCallsInHalfOpenState: 3
            slowCallDurationThreshold: PT15S
          retry:
            maxAttempts: 5
            processingStaleAfter: 10m
            backoff:
              initialInterval: 1m
              multiplier: 1.5
              maxInterval: 5m
            schedule:
              trigger:
                interval: 30s
                initialDelay: 30s
              lock:
                atMostFor: 10m
                atLeastFor: 10s
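
To get a feeling for the backoff values in this example, the waiting times can be worked out by hand. The sketch below assumes the common exponential backoff formula, delay = min(initialInterval × multiplier^(n−1), maxInterval) for the n-th retry; the starter's exact computation is not shown in this post, so treat the formula and the names `BackoffSketch`, `delayBeforeRetry` and `schedule` as assumptions.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper; assumes a standard capped exponential backoff.
class BackoffSketch {
    // Delay before the n-th retry (1-based): min(initial * multiplier^(n-1), max).
    static Duration delayBeforeRetry(int retry, Duration initial, double multiplier, Duration max) {
        double millis = initial.toMillis() * Math.pow(multiplier, retry - 1);
        return Duration.ofMillis((long) Math.min(millis, max.toMillis()));
    }

    // maxAttempts includes the initial call, so there are maxAttempts - 1 retries.
    static List<Duration> schedule(int maxAttempts, Duration initial, double multiplier, Duration max) {
        List<Duration> delays = new ArrayList<>();
        for (int retry = 1; retry < maxAttempts; retry++) {
            delays.add(delayBeforeRetry(retry, initial, multiplier, max));
        }
        return delays;
    }
}
```

With `maxAttempts: 5`, `initialInterval: 1m`, `multiplier: 1.5` and `maxInterval: 5m`, this yields four retries after waits of 1m, 1m30s, 2m15s and 3m22.5s. The 5m cap would only apply from a fifth retry onwards (1m × 1.5⁴ ≈ 5m04s). Because the retry job is triggered periodically (every 30s here), actual retry times are presumably rounded up to the next run of the job.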

To avoid having to define all these options every time we use an outbox, some predefined templates are provided:

- default – General multipurpose configuration
- web – Configuration intended to be used for outboxes sending HTTP requests
- amqp – Configuration intended to be used for outboxes sending AMQP messages

Predefined properties can be overridden by defining them explicitly.

    outbox:
      outboxes:
        OutboxDefault:
          type: default
          expireAfter: P50D
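
The configuration examples write durations in two notations: a simple form such as `30d` or `10m`, and the ISO-8601 form such as `P50D` or `PT15S`. Spring Boot's property binding accepts both for `java.time.Duration` values, which is presumably how the starter reads them. The following sketch only illustrates that the two notations describe the same values; `DurationNotationSketch` is a hypothetical hand-written stand-in that covers a small subset of units, not Spring Boot's converter.

```java
import java.time.Duration;

// Hypothetical stand-in for illustration; Spring Boot ships its own conversion.
class DurationNotationSketch {
    static Duration parse(String value) {
        if (value.startsWith("P")) {
            return Duration.parse(value); // ISO-8601, e.g. P50D or PT15S
        }
        // Simple form: a whole number followed by a one-letter unit, e.g. 30d or 10m.
        long amount = Long.parseLong(value.substring(0, value.length() - 1));
        char unit = value.charAt(value.length() - 1);
        switch (unit) {
            case 's': return Duration.ofSeconds(amount);
            case 'm': return Duration.ofMinutes(amount);
            case 'h': return Duration.ofHours(amount);
            case 'd': return Duration.ofDays(amount);
            default:  throw new IllegalArgumentException("unsupported unit: " + unit);
        }
    }
}
```

For example, `parse("30d")` and `parse("P30D")` return equal durations, so either notation can be used consistently within one configuration file.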

Conclusion

Now that we’ve covered how to configure the Outbox Spring Boot Starter, you should have a good understanding of what the Outbox pattern can do and how it fits into your event-driven architecture. In the next blog post, we’ll dive into the actual implementation of the Outbox Starter. Stay tuned to see how to put all this into action!